Final Update: 2025-12-07 (Project Archived)

1. Project Overview

Objective: Create an expandable framework for an ETL (Extract, Transform, Load) pipeline, which can be leveraged for future projects.

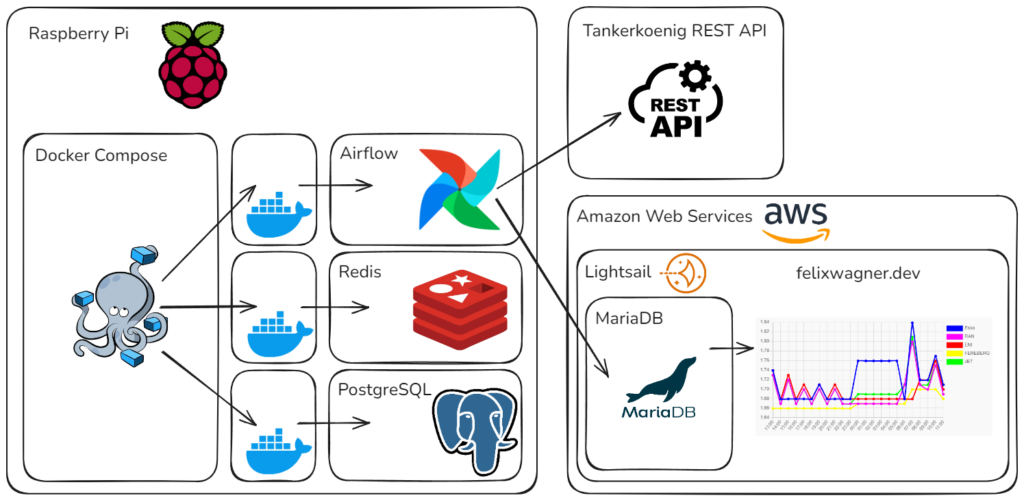

Outcome: Built a framework using Docker and Apache Airflow to extract, transform, and load data. The pipeline sends data to an Amazon Web Service (AWS) database, which powers a website that displays continuously updated data (as seen above).

2. Motivation and Context

Why this Project?

The project was undertaken to gain hands-on experience with widely used technologies in data engineering, such as Docker, Airflow, and AWS. Additionally, the aim was to create a robust ETL framework that could be expanded for future projects.

Practical Use

This project generates an up-to-date graph of gas prices on and hourly basis at various stations across Leutkirch. The information is both intriguing and practical, as gas prices can vary significantly based on the station and time of day. This application provides actionable insights.

3. Architecture and Workflow

Overview of the Architecture

- The pipeline runs on a Raspberry Pi with a Docker Compose file that initializes containers for Airflow, Redis, and PostgreSQL.

- Airflow schedules tasks to fetch data regularly from the Tankerkoenig REST API for five gas stations in Leutkirch (Germany).

- The collected data is sent to an AWS Lightsail MariaDB database.

- The website felixwagner.dev queries the database to display the data as a graph (can be seen at the start of this post).

Technologies Used

- Airflow: Orchestrates the ETL pipeline tasks.

- Docker: Manages containers for Airflow, Redis, and PostgreSQL.

- AWS Lightsail: Hosts WordPress and the MariaDB database.

- WordPress: Powers the website.

- SQL: Queries the relational database.

- REST API: Fetches data from Tankerkoenig.

- Python: Implements the data collection and processing.

- Linux: Hosts and manages the environments on the raspberry, lightsail and the docker images.

4. Implementation Details

Data Sources

- REST API: The pipeline collects gas price data from the Tankerkoenig API, which aggregates information from the German Federal Cartel Office.

- Relational Database: For simplicity, this project uses a single table with the following schema:

ID: Unique identifier for the entry.stationID: Unique identifier for the gas station.stationName: Name of the gas station.E10: Current price of E10 fuel.CreatedAt: Timestamp of the data entry.

Data Processing Steps

- The primary focus was on building the framework, so data transformation requirements were minimal. The retrieved data is formatted into SQL statements for insertion into the database.

- Airflow tasks fetch raw data every hour from the API and inserts it into the database.

Storage and Access

- Data is sent and stored in a MariaDB database hosted on AWS Lightsail.

5. Learning Outcomes

Skills gained

- Docker: Learned how to create and manage Dockerfiles and Docker Compose configurations.

- Apache Airflow: Gained experience in setting up and configuring workflows for task orchestration.

- AWS Lightsail: Explored hosting and managing databases in a cloud environment.

- End-to-End Pipeline Development: Built and integrated all components of an ETL pipeline.

Leave a Reply